GNU Radio Frame Generation for Simulations

When running simulations with GNU Radio, one can easily run into problems if asynchronous messages are involved. I already wrote about limitations of the scheduler, which, in essence, cause run-to-completion flow graphs to run forever.

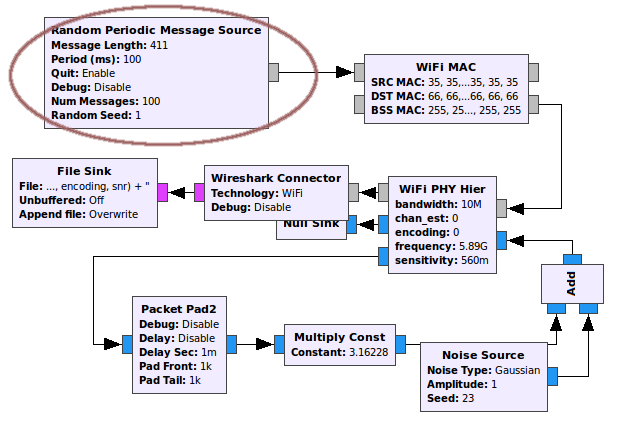

Another problem is the lack of a back-pressure mechanism for asynchronous messages. Consider the following flow graph, which I used for WiFi simulations over an AWGN channel. It sends 100 frames at a configurable SNR and logs the received ones in a PCAP file.

The problem is the rate at which frames are injected into the WiFi MAC block. The message source has no way of knowing when previous frames have been processed. Therefore, messages might pile up in the input queue of the MAC block and, once the queue is full, get dropped, leading to wrong simulation results.
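To make the effect concrete, here is a toy Python model of an open-loop message source feeding a bounded input queue. It is not GNU Radio code: the one-message-per-tick source, the fixed `ticks_per_frame` processing cost, the queue `capacity`, and the drop-oldest policy are all assumptions chosen for illustration.

```python
from collections import deque


def simulate_open_loop(n_frames, capacity, ticks_per_frame):
    """Toy model of a message source that posts frames without feedback.

    Assumptions (not GNU Radio internals): the source posts one message per
    tick, the MAC needs `ticks_per_frame` (>= 1) ticks per message, and a
    full queue discards its oldest message to make room for the new one.
    Returns (processed, dropped).
    """
    queue = deque()
    sent = processed = dropped = 0
    busy = 0  # ticks left until the MAC finishes its current frame

    while sent < n_frames or queue or busy:
        # The source cannot see the MAC's progress, so it just keeps posting.
        if sent < n_frames:
            if len(queue) >= capacity:
                queue.popleft()  # queue full: the oldest message is lost
                dropped += 1
            queue.append(sent)
            sent += 1

        # The MAC picks up the next message once it is idle.
        if busy == 0 and queue:
            queue.popleft()
            busy = ticks_per_frame

        if busy:
            busy -= 1
            if busy == 0:
                processed += 1

    return processed, dropped


if __name__ == "__main__":
    print(simulate_open_loop(100, capacity=8, ticks_per_frame=1))  # (100, 0)
    print(simulate_open_loop(100, capacity=8, ticks_per_frame=5))  # slow MAC
```

As long as the MAC keeps up with the source, nothing is lost; as soon as it is slower, the queue fills and frames silently disappear, which is exactly what skews the simulation results.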

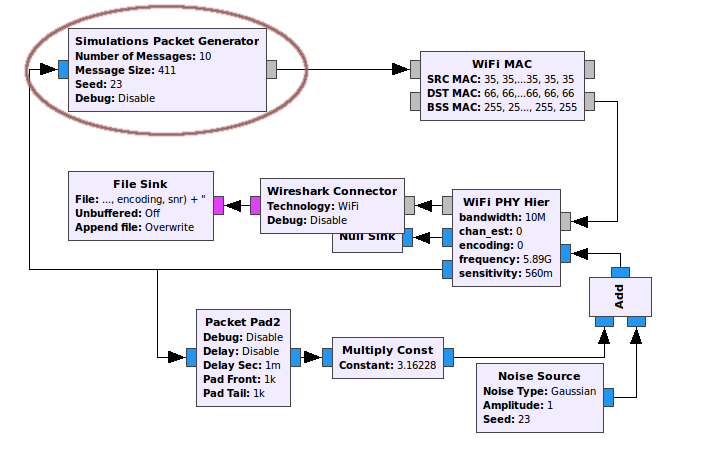

My current workaround is to use a feedback mechanism as shown in this flow graph.

It exploits the fact that back-pressure works once the message is converted into the stream domain, so no samples are dropped. (Even in normal flow graphs, samples are dropped only by SDR hardware blocks that cannot push samples into the flow graph fast enough, never inside the flow graph itself.) When the flow graph starts, the packet generator emits one initial message to get the simulation started. After that, it waits until the PHY layer has generated the frame (i.e., converted it into the stream domain) and the frame has been fed back into the packet generator. The generator uses this feedback as a notification for when to inject the next frame; think of a turbocharger.
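The feedback loop can also be modeled in plain Python. The sketch below is a hypothetical stand-in, not the actual packet generator block: `FeedbackGenerator`, its `start()` and `on_feedback()` methods, and the `publish` callback are names made up for illustration. It shows the key property: since a new frame is only injected after the previous one has come back from the PHY, the MAC's input queue never holds more than one message, regardless of how slow the downstream blocks are.

```python
from collections import deque


class FeedbackGenerator:
    """Hypothetical packet generator that injects a frame only on feedback."""

    def __init__(self, n_frames, publish):
        self.n_frames = n_frames
        self.publish = publish  # callback that posts a message downstream
        self.sent = 0

    def _inject(self):
        if self.sent < self.n_frames:
            self.publish(f"frame-{self.sent}".encode())
            self.sent += 1

    def start(self):
        # One initial message to get the simulation started.
        self._inject()

    def on_feedback(self, frame):
        # The previous frame has reached the stream domain, so it is safe
        # to inject the next one.
        self._inject()


def run_closed_loop(n_frames):
    """Drive the generator against a MAC input queue; return (processed, max_depth)."""
    mac_queue = deque()
    gen = FeedbackGenerator(n_frames, publish=mac_queue.append)
    gen.start()
    max_depth = len(mac_queue)
    processed = 0

    while mac_queue:
        frame = mac_queue.popleft()  # MAC + PHY turn the frame into samples...
        processed += 1
        gen.on_feedback(frame)  # ...and the frame is fed back to the generator
        max_depth = max(max_depth, len(mac_queue))

    return processed, max_depth


if __name__ == "__main__":
    print(run_closed_loop(100))  # (100, 1)
```

In the real flow graph, the loop is driven by message handlers rather than a `while` loop, but the invariant is the same: at most one frame is in flight on the transmit side.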

Unfortunately, that doesn’t solve all problems, as it only works around the back-pressure problem on the transmit side. As you can see, the Wireshark Connector also has a message port input, where messages might still pile up. While this was not a problem in practice, it shows that the solution is not perfect if the flow graph has multiple separate parts that use asynchronous messages.

Another option is setting the maximum message queue size through GNU Radio’s preferences. In theory, it is possible to adjust the size according to the number of frames to be sent and push all messages into the queue. But, of course, that doesn’t really scale and, like the other approach, does not solve the underlying problem.
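A short sketch shows why this does not scale. In this toy model, all frames are posted before the consumer runs, and the queue is a `deque` with a fixed `maxlen`, which silently discards the oldest entry when a new one is appended to a full queue. The function name and the limit of 8192 are illustrative only. In GNU Radio, the limit comes from the runtime preferences (assumed here to be the `max_messages` option in the `[DEFAULT]` section; check the configuration file shipped with your version).

```python
from collections import deque


def frames_surviving_burst(n_frames, queue_limit):
    """Post all frames up front into a bounded queue; return how many survive.

    Toy model: deque(maxlen=...) drops the oldest entry on overflow, so only
    the last `queue_limit` frames are still queued when the consumer starts.
    """
    queue = deque(maxlen=queue_limit)
    for i in range(n_frames):
        queue.append(i)
    return len(queue)


if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        print(n, frames_surviving_burst(n, queue_limit=8192))
```

Every simulation with more frames than the configured limit loses frames, so the limit (and the memory held by queued frames) has to grow with the size of the experiment.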