Our subproject of the Collaborative Research Center MAKI got funded :-)

It’s the first time I can hire a PhD student, so I’m looking for motivated candidates who want to build fancy SDR prototypes and explore new concepts for SDR runtimes.

If you’re interested, drop me an email.

Josh Morman and I gave an update on our current efforts to build a new, more modular runtime for GNU Radio that comes with pluggable schedulers and native support for accelerators.

If you’re interested in the topic

This is the second post on FutureSDR, an experimental async SDR runtime, implemented in Rust.

While the previous post gave a high-level overview of the core concepts, this and the following posts will be about more specific topics.

Since I just finished integrating AXI DMA custom buffers for FPGA acceleration, this post discusses them.

The goal of the post is threefold:

- Show that the custom buffers API, presented in the previous post, can easily be generalized to different types of accelerators.

- Show that FutureSDR can integrate the various interfaces and mechanisms to communicate between the FPGA and the CPU.

- Provide a complete walk-through of a minimal example that demonstrates how FPGAs can be integrated into SDR applications. (I didn’t find anything on this topic and hope that this shows the big picture and helps others get started.)

The actual example is simple: we’ll use the FPGA to add 123 to 32-bit integers.

The data will be DMA’ed to the FPGA, which will stream the integers through the adder and copy the data back to CPU memory.

It’s only slightly more interesting than a loopback example, but the actual FPGA implementation is, of course, not the point.

The adder could just as well be swapped for drop-in FIR filter, FFT, or similar DSP cores.
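When checking the data that comes back from the FPGA, it helps to have a software reference for the expected values. The shell function below is a hypothetical host-side model, not part of FutureSDR or the FPGA design, and it assumes that the adder treats samples as unsigned 32-bit values that wrap around modulo 2^32, as a fixed-width hardware adder typically would.

```shell
# Hypothetical reference model (not part of FutureSDR): add 123 to each
# argument, interpreted as an unsigned 32-bit integer, and wrap modulo 2^32
# like a fixed-width hardware adder would.
add123() {
  for x in "$@"; do
    echo $(( (x + 123) & 0xFFFFFFFF ))
  done
}
```

For example, `add123 1 2 4294967295` prints 124, 125, and 122, one per line; the last value illustrates the wrap-around.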

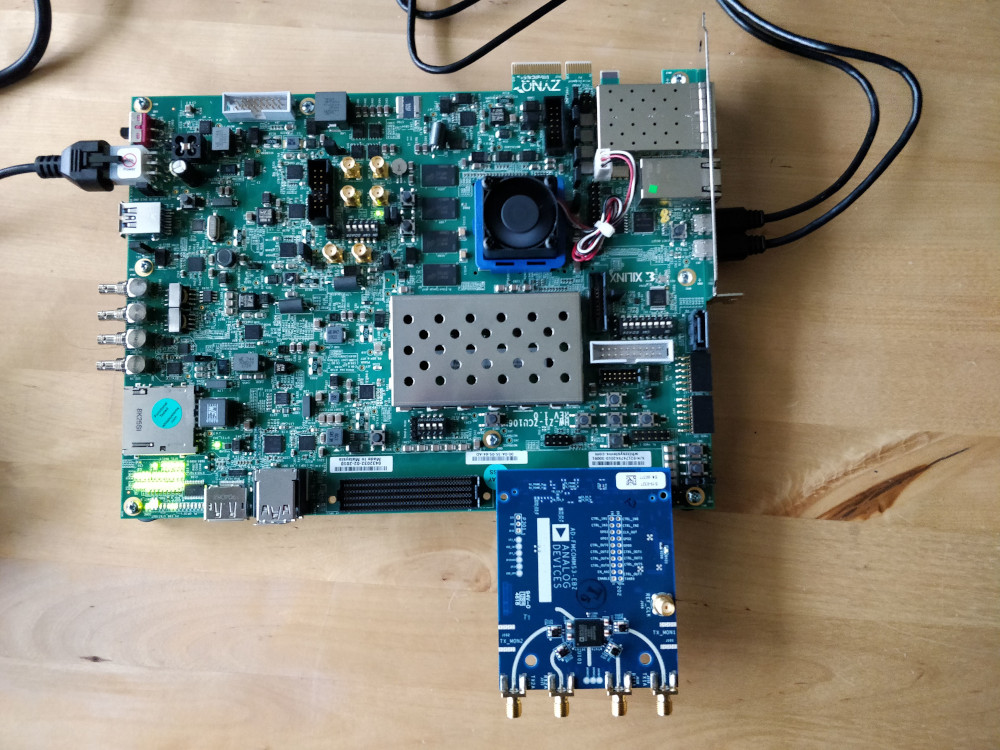

I only have a Xilinx ZCU106 board with a Zynq UltraScale+ MPSoC.

But the example doesn’t use any fancy features and should work on any Zynq platform.


In the last year, I’ve been thinking about how GNU Radio and SDR runtimes in general could improve.

I had some ideas in mind that I wanted to test with quick prototypes.

But C++ and rapid prototyping don’t really go together, so I looked into Rust and created a minimalistic SDR runtime from scratch.

Even though I’m still very bad at Rust, it was a lot of fun to implement.

One of the core ideas for the runtime was to experiment with async programming, which Rust supports through Futures.

Hence the name FutureSDR.

(Other languages might call similar constructs promises or co-routines.)

The runtime uses futures and it is a future, in the sense that it’s a placeholder for something that is not ready yet :-)

FutureSDR is minimalistic and has far fewer features than GNU Radio.

But, at the same time, it’s only ~5100 lines of code and served me well to quickly test ideas.

I, furthermore, just implemented basic accelerator support and finally have the feeling that the current base design makes some sense.

Overall, I think it has reached a state where it might be useful for experimenting with SDR runtimes.

So this post gives a bit of an overview and introduces the main ideas.

In follow-up posts, I want to go into more detail on specific features, each with an associated git commit that introduces the feature.

Note that the code examples might not compile exactly as-is (e.g. I leave some irrelevant function parameters out).

Also there is, at the moment, no proper error handling (i.e., unwrap() all over the place).


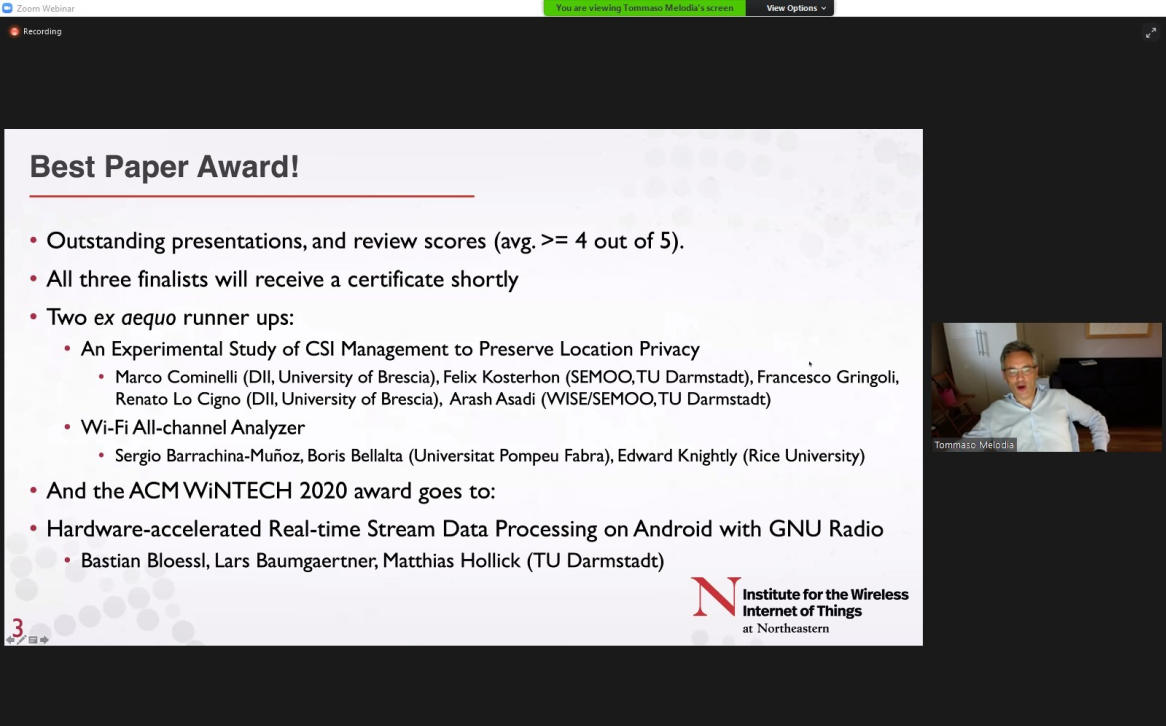

I’m happy to receive the Best Paper Award of ACM WiNTECH 2020 – a workshop on wireless testbeds that is held in conjunction with ACM MobiCom – for my paper about GNU Radio on Android.

I’m particularly happy, because I think it’s really great that this type of paper is appreciated at an academic venue 🍾 🍾 🍾

- Bastian Bloessl, Lars Baumgärtner and Matthias Hollick, “Hardware-Accelerated Real-Time Stream Data Processing on Android with GNU Radio,” Proceedings of 14th International Workshop on Wireless Network Testbeds, Experimental evaluation and Characterization (WiNTECH’20), London, UK, September 2020.

[DOI, BibTeX, PDF and Details…]


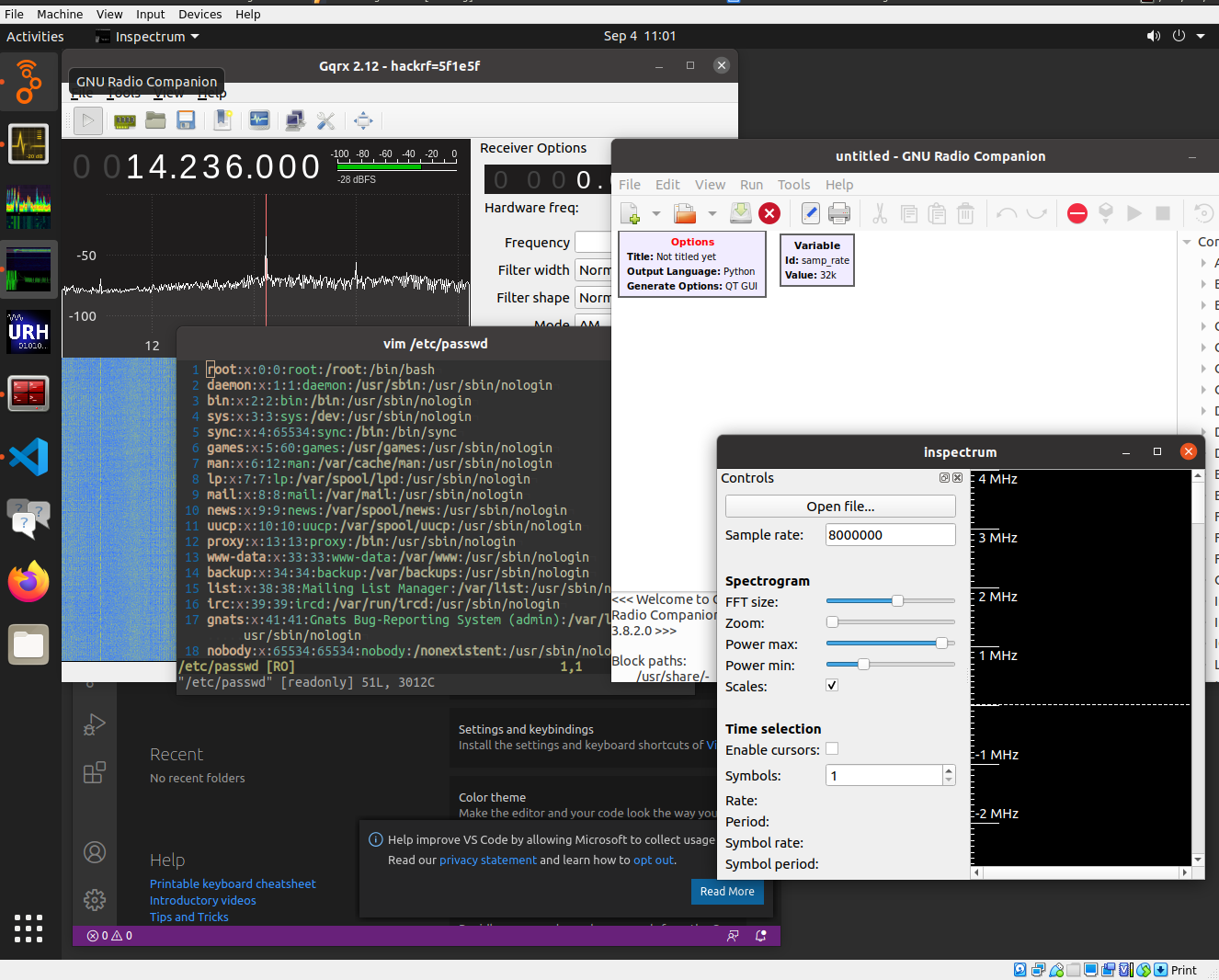

I recently updated instant-gnuradio, a project that uses Packer to create a VM with GNU Radio and many SDR-related applications pre-installed.

I had wanted to do that for quite a while, and GRCon20 was a good occasion, since we wanted to provide an easy-to-use environment to get started with SDR anyway.

I hadn’t maintained the project over the last year and hadn’t even ported it to GNU Radio 3.8.

This was mainly because the build relied on PyBombs, which was not maintained and broke every other day.

(This is about to change, by the way, since PyBombs now has two new, very active maintainers. Yay!)

But recently distribution packaging has improved quite a lot.

The new build uses Josh’s PPAs, which provide recent GNU Radio versions.

With this, the build is faster, simpler, and hopefully also more stable.

I also updated to Ubuntu 20.04 and added VS Code. Like the previous version, it comes with Intel OpenCL preinstalled, supporting Fosphor out of the box.

The biggest advantage of the Packer build is that it is easy to customize the VM for workshops or courses (e.g., change branding like the background image, add a link to the course website in the favorites bar, install additional software, etc.).

A good example might be the VM I built for Daniel Estévez’s GRCon workshop on satellite communications.

Some months ago, I looked into Tom Rondeau’s work on running GNU Radio on Android.

It took me quite some time to understand what was going on, but I finally managed to put all pieces together and have GNU Radio running on my phone. A state-of-the-art real-time stream-data processing system and a comprehensive library of high-quality DSP blocks on my phone – that’s pretty cool. And once the toolchain is set up, it’s really easy to build SDR apps.

I put quite some work into the project, updated everything to the most recent version and added various new features and example applications. Here are some highlights of the current version:

- Supports the most recent version of GNU Radio (v3.8).

- Supports 32-bit and 64-bit ARM architectures (i.e., armeabi-v7a and arm64-v8a).

- Supports popular hardware frontends (RTL-SDR, HackRF, and Ettus B2XX). Others can be added if there is interest.

- Supports interfacing Android hardware (mic, speaker, accelerometer, …) through gr-grand.

- Does not require rooting the device.

- All signal processing happens in the C++ domain.

- Provides various means to interact with a flowgraph from the Java domain (e.g., Control Port, PMTs, ZeroMQ, TCP/UDP).

- Comes with a custom GNU Radio double-mapped circular buffer implementation, using Android shared memory.

- Benefits from SIMD extensions through VOLK and comes with a profiling app for Android.

- Benefits from OpenCL through gr-clenabled.

- Includes an Android app to benchmark GNU Radio runtime, VOLK, and OpenCL.

- Includes example applications for WLAN and FM.

I think this is pretty complete. To demonstrate that it is possible to run non-trivial projects on a phone, I added an Android version of my GNU Radio WLAN transceiver. Whether it runs in real time depends, of course, on the processor, the bandwidth, etc.

Development Environment

The whole project is rather complex. To make it more accessible and hopefully more maintainable, I

- created a git repository with build scripts for the toolchain. It includes GNU Radio and all its dependencies as submodules pointing (if necessary) to patched versions of the libraries.

- created a Dockerfile that can be used to set up a complete development environment in a container. It includes the toolchain, Android Studio, the required Android SDKs, and example applications.

The project is now available on GitHub.

It was a major effort to put everything together, clean up the code, and add some documentation. There might be rough edges, but I’m happy to help and look forward to feedback.

If you’re using the GNU Radio Android toolchain in your work, I’d appreciate a reference to:

- Bastian Bloessl, Lars Baumgärtner and Matthias Hollick, “Hardware-Accelerated Real-Time Stream Data Processing on Android with GNU Radio,” Proceedings of 14th International Workshop on Wireless Network Testbeds, Experimental evaluation and Characterization (WiNTECH’20), London, UK, September 2020.

[DOI, BibTeX, PDF and Details…]


In the last post, we set up an environment to conduct stable and reproducible performance measurements.

This time, we’ll do some first benchmarks.

(A more verbose description of the experiments is available in [1]. The code and evaluation scripts of the measurements are on GitHub.)

The simplest and most accurate thing we can do is to measure the run time of a flowgraph when processing a given workload.

It is the most accurate measure, since we do not have to modify the flowgraph or GNU Radio to introduce measurement probes.

The problem with benchmarking a real-time signal processing system is that monitoring inevitably changes the system and, therefore, its behavior.

It’s like the system behaves differently when you look at it.

This is not an academic problem.

Just recording a timestamp can introduce considerable overhead and change scheduling behavior.
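A simple way to collect run times without touching the flowgraph is to time repeated runs of the application from the outside. The sketch below is a hypothetical helper, not the evaluation scripts from GitHub; it assumes GNU `date` (for nanosecond resolution via `%N`) and measures the whole process, including startup and teardown, whereas the measurements in this post time only `top_block::run()` inside the application.

```shell
# Hypothetical helper: run a command N times and print the wall-clock run
# time of each run in seconds, one per line. Requires GNU date (%N).
time_runs() {
  n=$1
  shift
  i=0
  while [ "$i" -lt "$n" ]; do
    start=$(date +%s%N)
    "$@" > /dev/null
    end=$(date +%s%N)
    # Subtract in 64-bit shell arithmetic, then convert ns to s with awk.
    awk -v ns="$((end - start))" 'BEGIN { printf "%.6f\n", ns / 1e9 }'
    i=$((i + 1))
  done
}
```

For example, `time_runs 10 cset shield --userset=sdr --exec -- ./run_flowgraph > runtimes.txt` would record ten run times of a flowgraph started in the SDR CPU set.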

In the first experiment, we consider flowgraph topologies like this.

They allow us to scale the number of pipes (i.e., parallel streams) and stages (i.e., blocks per pipe).

All flowgraphs are created programmatically with a C++ application to avoid any possible impact from Python bindings.

My system is based on Ubuntu 19.04 and runs GNU Radio 3.8, which was compiled with GCC 8.3 in release mode.

GNU Radio supports two connection types between blocks: a message passing interface and a ring buffer-based interface.

We start with the message passing interface.

Since we want to focus on the scheduler and not on DSP performance, we use PDU Filter blocks, which just forward messages through the flowgraph.

Our messages, of course, do not contain the key that would be filtered out.

(Update: And this is, of course, where I messed up in the initial version of the post.)

I, therefore, created a custom block that just forwards messages through the flowgraph and added a debug switch to make sure that this doesn’t happen again.

Before starting the flowgraph, we create and enqueue a given number of messages in the first block.

Finally, we also enqueue a done message, which signals the scheduler to shut down the block.

We used 500-byte blobs, but since messages are forwarded through pointers, the type of the message does not have a sizeable impact.

The run time of top_block::run() looks like this:

Note that we scaled the number of pipes and stages jointly, i.e., an x-axis value of 100 corresponds to 10 pipes and 10 stages.

The error bars indicate confidence intervals of the mean for a confidence level of 95%.
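For reference, such error bars follow the usual interval mean ± z·s/√n, where s is the sample standard deviation of n repeated runs. The awk-based helper below is a sketch that assumes a normal approximation (z = 1.96), which is only adequate for a reasonably large number of repetitions; with few runs, a Student-t quantile gives wider and more accurate intervals. It is not the evaluation code used for the plots.

```shell
# Hypothetical helper: print the mean and the half-width of a 95% confidence
# interval (normal approximation, z = 1.96) of one value per input line.
# Reads a file given as argument, or standard input.
mean_ci() {
  awk '
    { sum += $1; sumsq += $1 * $1; n++ }
    END {
      if (n < 2) { print "need at least two values" > "/dev/stderr"; exit 1 }
      mean = sum / n
      var = (sumsq - n * mean * mean) / (n - 1)   # sample variance
      if (var < 0) var = 0                         # guard against rounding
      printf "%.4f %.4f\n", mean, 1.96 * sqrt(var / n)
    }
  ' "${1:--}"
}
```

For example, `printf '1\n2\n3\n4\n5\n' | mean_ci` prints `3.0000 1.3859`, i.e., a mean of 3 with an interval of roughly ±1.39.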

The good news is: GNU Radio scales rather well, even for a large number of threads.

In this case, up to 400 threads on 4 CPU cores.

While it is worse than linear, we were not able to reproduce the horrendous results presented at FOSDEM.

These measurements were probably conducted with an earlier version of GNU Radio, containing a bug that caused several message passing blocks to busy-wait.

With this bug, it is plausible that performance collapsed once the number of blocks exceeded the number of CPUs.

We will investigate it further in the following posts.

Furthermore, we can see that the difference between normal and real-time priority is only marginal.

Not so for the buffer interface.

Here, we used a Null Source followed by a Head block to pipe 100e6 4-byte floats into the flowgraph.

To focus on the scheduler and not on DSP, we used Copy blocks in the pipes, which just copy the samples from the input to the output buffer.

Each pipe was, furthermore, terminated by a Null Sink.

Like in the previous experiment, we scale the number of pipes and stages jointly and measure the run time of top_block::run().
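Since each run pushes 100e6 floats of 4 bytes each, i.e., 400 MB, through the flowgraph, a measured run time converts directly into an average processing rate. The hypothetical helper below only does that arithmetic; it assumes the sample count from the Head block described above, which can be overridden with a second argument.

```shell
# Hypothetical helper: convert a run time (in seconds) into the average rate
# at which the given number of 4-byte float samples were processed.
# Defaults to the 100e6 samples produced by the Head block.
sample_rate() {
  awk -v t="$1" -v n="${2:-100000000}" 'BEGIN {
    printf "%.1f MS/s, %.1f MB/s\n", n / t / 1e6, 4 * n / t / 1e6
  }'
}
```

For example, a run time of 2 s corresponds to `sample_rate 2`, which prints `50.0 MS/s, 200.0 MB/s`.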

Again, good news: the performance of the buffer interface also scales linearly with the number of blocks (even though we will later see that there is considerable overhead from thread synchronization).

What’s not visible from the plot is that, even with the performance CPU governor, the CPU might reduce its frequency due to heat.

So while the confidence intervals indicate that we have a good estimate of the mean, the underlying individual measurements were bimodal (or multi-modal), depending on the CPU frequency.

What might be surprising is that real-time priority performs so much better.

For 100 blocks (10 pipes and 10 stages), it is about 50% faster.

Remember, we created a dedicated CPU set for the measurements to avoid any interference from the operating system.

So there are no priority issues.

To understand what’s going on, we have to look into the Linux process scheduler.

But that’s a topic for a later post.

- Bastian Bloessl, Marcus Müller and Matthias Hollick, “Benchmarking and Profiling the GNU Radio Scheduler,” Proceedings of 9th GNU Radio Conference (GRCon 2019), Huntsville, AL, September 2019.

[BibTeX, PDF and Details…]

I recently started at the Secure Mobile Network Lab at TU Darmstadt, where I work on Software Defined Wireless Networks.

For the time being, I’m looking into real-time data processing on normal PCs (aka the GNU Radio runtime).

I’m really happy to have the chance to work in this area, since it’s something that has interested me for years.

And, now, I have some time to have a close look at the topic.

In the last weeks, I experimented quite a lot with the GNU Radio runtime and new tools that helped me to get some data out of it.

Since I didn’t find a lot of information, I wanted to start a series of blog posts about the more technical bits.

(A more verbose description of the setup is available in [1]. The code and scripts are also on GitHub.)

Setting up the Environment

The first question was how to set up an environment that allows me to conduct reproducible performance measurements.

(While this was my main goal, I think most information in this post can also be useful to get a more stable and deterministic throughput of your flow graph.)

Usually, GNU Radio runs on a normal operating system like Linux that’s doing a lot of stuff in the background.

So things like file synchronization, search indexes that are updated, cron jobs, etc. might interfere with measurements.

Ideally, I want to measure the performance of GNU Radio and nothing else.

CPU Sets

The first thing that I had to get rid of was interference from other processes that are running on the same system.

Fortunately, Linux comes with CPU sets, which allow partitioning the CPUs and migrating all processes to a system set, leaving the other CPUs exclusively for GNU Radio.

New processes will also, by default, end up in the system set.

On my laptop, I have 8 CPUs (4 cores with hyper-threading) and wanted to dedicate 2 cores, including their hyper-threads, to GNU Radio.

Initially, I assumed that CPUs 0-3 would correspond to the first two cores and 4-7 to the others.

As it turns out, this is not the case.

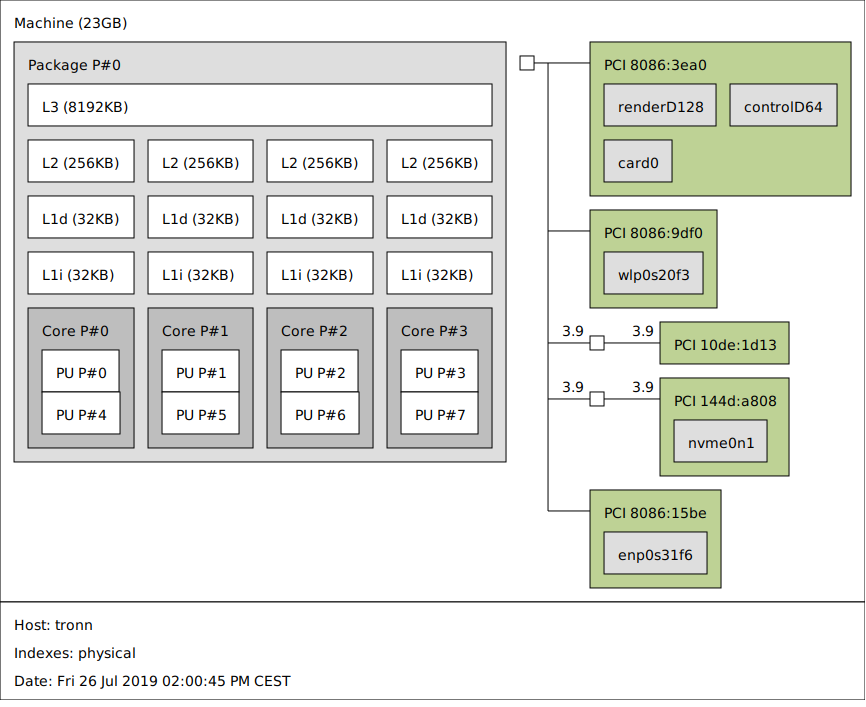

The lstopo command (on Ubuntu part of the hwloc package) gives a nice overview:

As we can see from the figure, it’s actually CPUs 2, 3, 6, and 7 that correspond to cores 2 and 3.
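Without a graphical tool, the same mapping can be read from sysfs, where each logical CPU lists the hardware threads that share its core in `topology/thread_siblings_list` (`lscpu -e` also prints a table with a CORE column). The function below is a small sketch around that file; the optional directory argument only exists so that it can be pointed at a different tree, e.g., for testing.

```shell
# Print the hyper-thread siblings of every logical CPU, e.g. "cpu2: 2,6".
# Note: the glob sorts lexicographically (cpu1, cpu10, cpu2, ...).
list_siblings() {
  for dir in "${1:-/sys/devices/system/cpu}"/cpu[0-9]*; do
    [ -r "$dir/topology/thread_siblings_list" ] || continue
    printf '%s: %s\n' "${dir##*/}" "$(cat "$dir/topology/thread_siblings_list")"
  done
}
```

CPUs that list each other belong to the same physical core and should go into the same CPU set.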

To create a CPU set sdr with CPUs 2, 3, 6, and 7, I ran:

```shell
sudo cset shield --sysset=system --userset=sdr --cpu=2,6,3,7 --kthread=on
sudo chown -R root:basti /sys/fs/cgroup/cpuset
sudo chmod -R g+rwx /sys/fs/cgroup/cpuset
```

The latter two commands allow my user to start processes in the SDR CPU set.

The kthread option tries to also migrate kernel threads to the system CPU set.

This is not possible for all kernel threads, since some have CPU-specific tasks, but it’s the best we can do.

Starting a GNU Radio flow graph in the SDR CPU set can be done with:

```shell
cset shield --userset=sdr --exec -- ./run_flowgraph
```

(As a side note: Linux has a kernel parameter, isolcpus, that isolates CPUs from the Linux scheduler.

I also tried this approach, but as it turns out, it really means what it says, i.e., these cores are excluded from the scheduler.

When I started GNU Radio with an affinity mask of the whole set, it always ended up on a single core.

Without a scheduler, there are no task migrations to other cores, which renders this approach useless.)

IRQ Affinity

Another issue is interrupts.

If, for example, the GPU or network interface constantly interrupts the CPU where GNU Radio is running, we get lots of jitter and the throughput of the flow graph might vary significantly over time.

Fortunately, many interrupts are programmable, which means that they can be assigned to (a set of) CPUs.

This is called interrupt affinity and can be adjusted through the proc file system.

With watch -n 1 cat /proc/interrupts it’s possible to monitor the interrupt counts per CPU:

```
        CPU0    CPU1    CPU2    CPU3    CPU4    CPU5    CPU6    CPU7
  0:      12       0       0       0       0       0       0       0  IR-IO-APIC   2-edge     timer
  1:     625       0       0       0      11       0       0       0  IR-IO-APIC   1-edge     i8042
  8:       0       0       0       0       0       1       0       0  IR-IO-APIC   8-edge     rtc0
  9:  103378   43143       0       0       0       0       0       0  IR-IO-APIC   9-fasteoi  acpi
 12:   17737       0       0     533      67       0       0       0  IR-IO-APIC  12-edge     i8042
 14:       0       0       0       0       0       0       0       0  IR-IO-APIC  14-fasteoi  INT34BB:00
 16:       0       0       0       0       0       0       0       0  IR-IO-APIC  16-fasteoi  i801_smbus, i2c_designware.0, idma64.0, mmc0
 31:       0       0       0       0       0       0       0  100000  IR-IO-APIC  31-fasteoi  tpm0
120:       0       0       0       0       0       0       0       0  DMAR-MSI     0-edge     dmar0
[...]
```
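On a real machine, this table gets long. A small awk sketch can sum the counts per CPU column, which makes it easy to check whether the CPUs of the SDR set still receive interrupts. It only considers numbered IRQ lines (not NMI, LOC, etc.) and assumes the usual layout of a header row followed by one count column per online CPU. Since the counters are cumulative since boot, comparing snapshots taken before and after a measurement is more telling than a single one.

```shell
# Sum the interrupt counts of each CPU column in /proc/interrupts (or in a
# file given as argument; "-" reads standard input). Assumes the CPU columns
# are numbered 0..n-1, i.e., all CPUs are online.
irq_totals() {
  awk '
    NR == 1 { ncpu = NF; next }            # header row: CPU0 CPU1 ...
    $1 ~ /^[0-9]+:$/ {                     # numbered IRQs only
      for (i = 2; i <= ncpu + 1; i++) sum[i - 2] += $i
    }
    END { for (c = 0; c < ncpu; c++) printf "CPU%d %d\n", c, sum[c] }
  ' "${1:-/proc/interrupts}"
}
```

Applied to the excerpt above, it would report 0 for CPU2 and CPU6, 533 for CPU3, and 100000 for CPU7 (the tpm0 line).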

In my case, I wanted to keep as many interrupts as possible away from the CPUs in the SDR CPU set.

So I tried to set a mask of 0x33 for all interrupts.

In binary, this corresponds to 0b00110011, which selects CPUs 0, 1, 4, and 5, i.e., the CPUs of the system CPU set.

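```shell
# Restrict every IRQ to CPUs 0, 1, 4, 5 (mask 0x33). Some IRQs cannot be
# moved; writes to their smp_affinity fail, which is harmless here.
for i in /proc/irq/*/smp_affinity
do
    echo 33 | sudo tee "$i" > /dev/null
done
```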
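The mask is simply one bit per CPU, so it can be computed instead of converted by hand. The helper below is a sketch that builds the hexadecimal mask for a list of CPU numbers; it assumes at most 32 CPUs, since larger masks are written as comma-separated groups of 32 bits. Alternatively, the kernel also accepts a CPU list like `0,1,4,5` through the `smp_affinity_list` file next to each `smp_affinity`.

```shell
# Build the hexadecimal affinity mask for the given CPU numbers
# (one bit per CPU; only valid for up to 32 CPUs).
cpus_to_mask() {
  mask=0
  for cpu in "$@"; do
    mask=$(( mask | (1 << cpu) ))
  done
  printf '%x\n' "$mask"
}
```

For example, `cpus_to_mask 0 1 4 5` prints `33`, and `cpus_to_mask 2 3 6 7` prints `cc`, the mask of the SDR CPU set.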

Another potential pitfall is the irqbalance daemon, which might work against us by reassigning the interrupts to the CPU that we want to use for signal processing.

I, therefore, disabled the service during the measurements.

```shell
sudo systemctl stop irqbalance.service
```

CPU Governors

Finally, there is CPU frequency scaling, i.e., individual cores might adapt their frequency to the load of the system.

While this shouldn’t be an issue if the system is fully loaded, it might make a difference for bursty loads.

In my case, I mainly wanted to avoid initial transients and, therefore, set the CPU governor to performance, which should minimize frequency scaling.

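```shell
#!/bin/bash
set -e

# Use the governor passed as first argument; default to "performance".
if [ "$#" -lt 1 ]
then
    GOV=performance
else
    GOV=$1
fi

CORES=$(getconf _NPROCESSORS_ONLN)
i=0

echo "New CPU governor: ${GOV}"

# Apply the governor to every online CPU (cpufreq-set is part of cpufrequtils).
while [ "$i" -lt "$CORES" ]
do
    sudo cpufreq-set -c "$i" -g "$GOV"
    i=$(( i + 1 ))
done
```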
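To double-check that the governor took effect, and to spot frequency drops that can still occur due to heat, one can read the cpufreq files in sysfs. The function below is a sketch that prints the governor (`scaling_governor`) and the current frequency in kHz (`scaling_cur_freq`) of each CPU; CPUs without cpufreq support are skipped, and the optional directory argument only exists for testing.

```shell
# Print "cpuN: <governor> <current frequency> kHz" for every CPU that
# exposes cpufreq information in sysfs.
show_governors() {
  for dir in "${1:-/sys/devices/system/cpu}"/cpu[0-9]*; do
    [ -r "$dir/cpufreq/scaling_governor" ] || continue
    gov=$(cat "$dir/cpufreq/scaling_governor")
    freq=$(cat "$dir/cpufreq/scaling_cur_freq" 2>/dev/null || echo '?')
    printf '%s: %s %s kHz\n' "${dir##*/}" "$gov" "$freq"
  done
}
```

Calling it repeatedly during a measurement shows whether all CPUs of the SDR set stay at the expected frequency.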

I hope this was somewhat helpful.

In the next post, we’ll do some performance measurements.

- Bastian Bloessl, Marcus Müller and Matthias Hollick, “Benchmarking and Profiling the GNU Radio Scheduler,” Proceedings of 9th GNU Radio Conference (GRCon 2019), Huntsville, AL, September 2019.

[BibTeX, PDF and Details…]

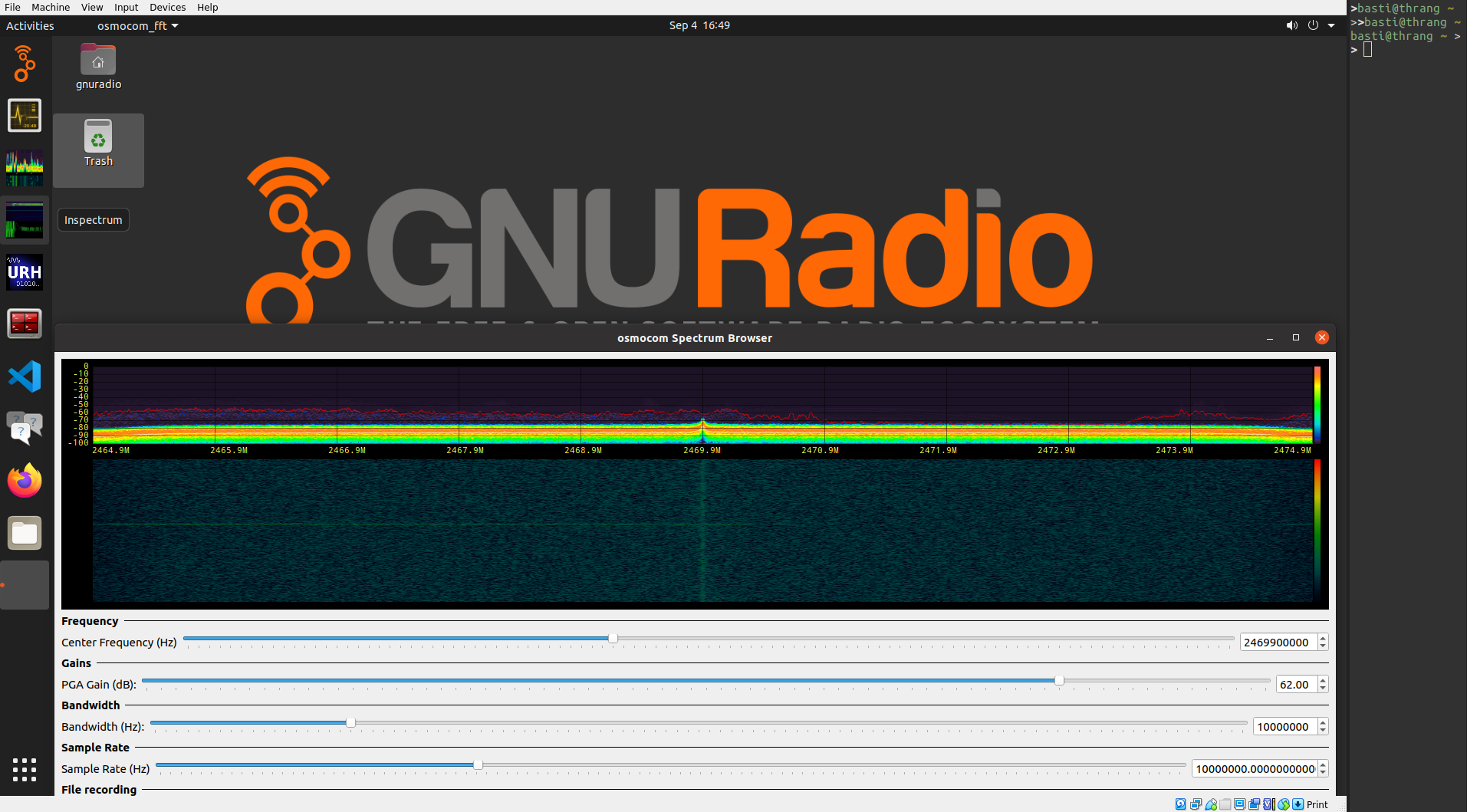

After porting my own out-of-tree modules to GNU Radio 3.8, I spent the weekend looking into gr-osmosdr and gr-fosphor – the two modules that held me back from switching to GNU Radio 3.8.

While it’s far from finished, I’m happy to have at least a working prototype.